Welcome to the Fifth Summer School on Statistical Methods for Linguistics and Psychology, 6-10 September 2021

Application, dates, location

- Dates: 6-10 September 2021.

- Times: The summer school is being taught completely online; the times will be decided closer to September.

- Location: The course will be taught virtually during this week, using pre-recorded videos, Moodle, and Zoom for daily real-time meetings. For the introductory courses, the recorded lectures will be available online, free to everyone (we will decide about the other courses later; watch this space).

- Application period: 16 Jan to 15 April 2021. Applications are closed; there were 633 applications. Decisions will be announced by 10th May 2021.

Brief history of the summer school, and motivation

The SMLP summer school was started by Shravan Vasishth in 2017, as part of a methods project funded within the SFB 1287. The summer school aims to fill a gap in statistics education, specifically within the fields of linguistics and psychology. One goal of the summer school is to provide comprehensive training in the theory and application of statistics, with a special focus on the linear mixed model. Another major goal is to make Bayesian data analysis a standard part of the toolkit for the linguistics and psychology researcher. Over time, the summer school has evolved to have at least four parallel streams: beginning and advanced courses in frequentist and Bayesian statistics. These may be expanded to more parallel sessions in future editions. We typically admit a total of 120 participants (in 2019, we had some 450 applications). In addition to the all-day courses, we regularly invite speakers to give lectures on important current issues relating to statistics. Previous editions of the summer school: 2020, 2019, 2018, 2017.

Code of conduct

All participants will be expected to follow the code of conduct, taken from StanCon 2018. Participants who have any concerns should contact any of the following instructors: Audrey Bürki, Anna Laurinavichyute, Shravan Vasishth, Bruno Nicenboim, or Reinhold Kliegl.

Invited lecturers

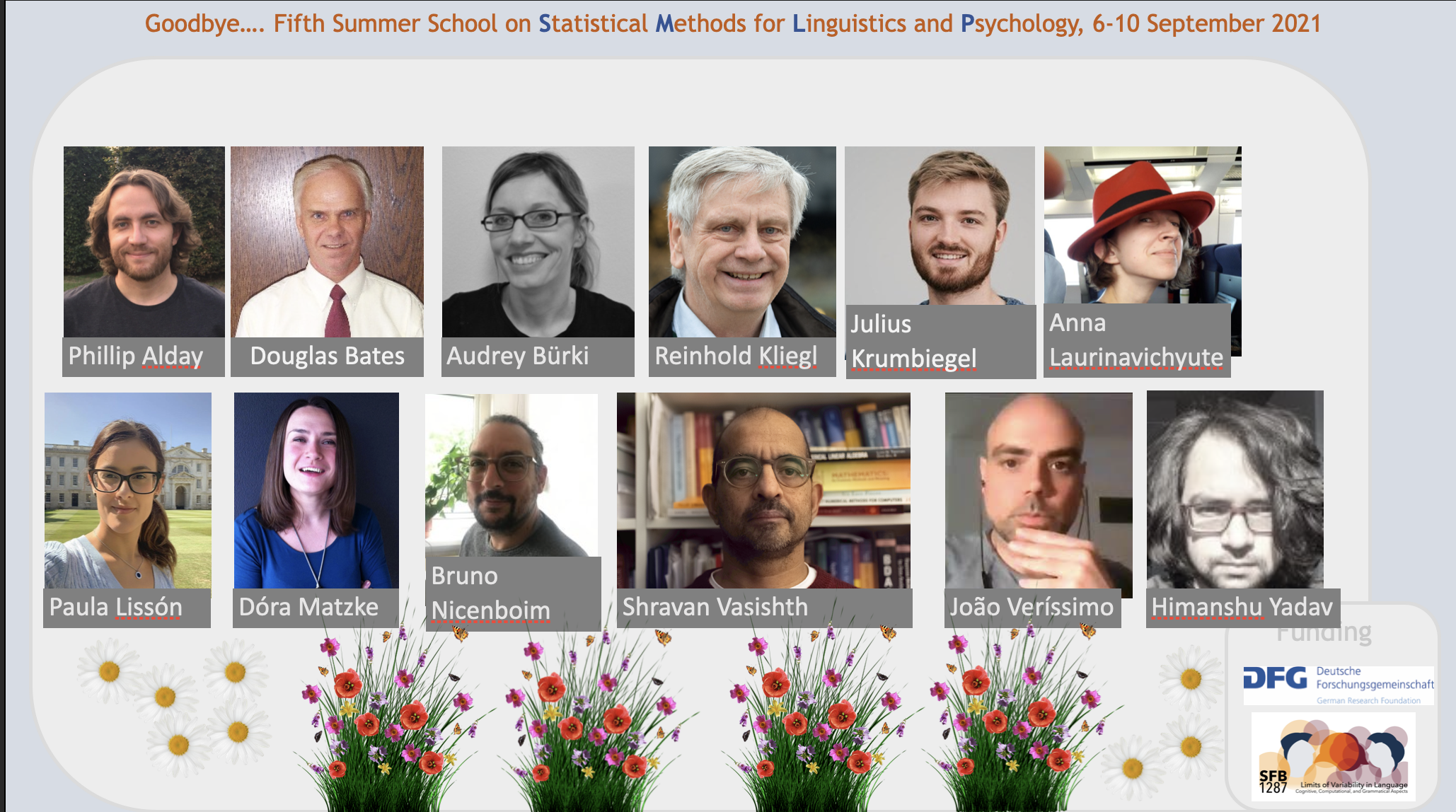

- Douglas Bates (co-instructor for Advanced methods in frequentist statistics with Julia)

- Phillip Alday (Advanced methods in frequentist statistics with Julia)

- João Veríssimo (Foundational methods in frequentist statistics)

Invited keynote speakers

- Dóra Erbé-Matzke:

Title: Flexible cognitive architectures for response inhibition

Date: 7th Sept 2021, 5-6PM CEST.

Video recording: The network crashed mid-recording, so there are two parts to the keynote:

Abstract: Imagine you are driving down the highway, stuck behind a slow car. You glance in the rear-view mirror to check if it is safe to overtake, but before you do so, you hear the siren of an ambulance and abort the overtaking manoeuvre. This type of response inhibition, the ability to stop ongoing responses that are no longer appropriate, is a central component of executive control and is essential for safe and effective interaction with an ever-changing and often unpredictable world. Inhibitory ability is typically quantified by the stop-signal reaction time, the completion time of an inhibitory process triggered by a signal to stop responding. Because stop-signal reaction times cannot be directly observed, they must be inferred based on a model in which independent inhibitory (“stop”) and response (“go”) processes race with each other to control behavior. I review the limitations of the traditional non-parametric race model framework and show that it cannot be used to investigate response inhibition in the full range of situations and paradigms that are relevant to the study of cognitive control. To address this shortcoming, I outline a flexible parametric approach that generalizes the race model to account for aspects of behavior that are characteristic of real-world stopping, such as choice errors, attentional lapses, and the interaction between the stop and go processes. I propose various parametrizations of the framework, ranging from the descriptive ex-Gaussian distribution to a racing diffusion evidence-accumulation architecture, explore the strengths and weaknesses of the different models, and illustrate their utility with clinical and experimental data in choice-based as well as anticipated-response-based paradigms. I end by discussing the potential of this modeling framework to provide a comprehensive account of the mental processes governing behavior in realistically complex situations, and how it may contribute to the prediction of stopping performance in dynamic settings.

- Phillip Alday:

Title: Putting the NO in ANOVA: the past, present and future (role) of statistics in linguistics and psychology

Date: 9th Sept 2021, 5-6PM CEST.

Video recording of the talk:

Abstract: Language science and psychological science have an uneasy relationship with statistics, both as a tool for dealing with data and a tool for building theories. In this talk, I will discuss the historical disappointments and resulting prejudices against statistics as well as critical insights made possible by statistics, before discussing the current state of the field. In particular, I will discuss what modern, computer-age statistical practice -- such as mixed-effects models, Bayesian statistics and machine learning -- has to offer compared to traditional tools and why it's time to use tools that Clark and Cohen could have only dreamed of. In this vein, I will highlight that classical tools were often insufficient even in their heyday and that the increased complexity of modern tools simply reflects the complexity of the underlying data, which we can no longer responsibly ignore. I will focus on modern statistical approaches as a tool for theory building -- both for theories with stochastic and variable components and as a tool for handling messy and variable real-world data -- instead of as an alternative to theory building. For example, I will touch upon how modern approaches allow for an integrative notion of intra- and interindividual variation, thus addressing an inherent data complexity and theoretical concern and allowing for nearly every study to be an individual-differences study. Finally, I will conclude with my vision for (the role of) statistics in the future of psychology and linguistics and provide a few suggestions for how to achieve that vision.

Curriculum and schedule

Social hour interface: All participants will be meeting Monday and Wednesday 5-6PM CEST on wonder.me. The link is here. The password will be sent to participants within each stream.

We offer foundational/introductory and advanced courses in Bayesian and frequentist statistics. When applying, participants are expected to choose only one stream.

- Introduction to Bayesian data analysis (maximum 30 participants). Taught by Shravan Vasishth, assisted by Anna Laurinavichyute and Paula Lissón. This course is an introduction to Bayesian modeling, oriented towards linguists and psychologists. Topics to be covered: Introduction to Bayesian data analysis, Linear Modeling, Hierarchical Models. We will cover these topics within the context of an applied Bayesian workflow that includes exploratory data analysis, model fitting, and model checking using simulation. Participants are expected to be familiar with R, and must have some experience in data analysis, particularly with the R library lme4.

- Advanced Bayesian data analysis (maximum 30 participants). Taught by Bruno Nicenboim, assisted by Himanshu Yadav. This course assumes that participants already have some experience in Bayesian modeling using brms and want to transition to Stan to learn more advanced methods and start building simple computational cognitive models. Participants should have worked through or be familiar with the material in the first five chapters of our book draft: Introduction to Bayesian Data Analysis for Cognitive Science. In this course, we will cover Parts III to V of our book draft: model comparison using Bayes factors and k-fold cross validation, introductory and relatively advanced models with Stan, and simple computational cognitive models.

- Foundational methods in frequentist statistics (maximum 30 participants). Taught by Audrey Bürki and João Veríssimo, video recordings by Shravan Vasishth. Participants will be expected to have used linear mixed models before, to the level of the textbook by Winter (2019, Statistics for Linguists), and should want to acquire a deeper knowledge of frequentist foundations and understand the linear mixed modeling framework more deeply. Participants are also expected to have fit multiple regressions. We will cover model selection and contrast coding, with a heavy emphasis on simulations to compute power and to understand what the model implies. We will work on (at least some of) the participants' own datasets. This course is not appropriate for researchers new to R or to frequentist statistics.

- Advanced methods in frequentist statistics with Julia (maximum 30 participants). Taught by Phillip Alday, Douglas Bates, and Reinhold Kliegl.

Course Materials Course web page: all materials (videos etc.) will be available here.

Textbook: here. We will work through the first six chapters.

Videos from previous editions: available here.

Course Materials Textbook here. We will start from chapter 7. Participants are expected to be familiar with the first five chapters.

Course Materials Textbook draft here.

Videos: available here.

Course Materials Github repo: here.